NGBoost

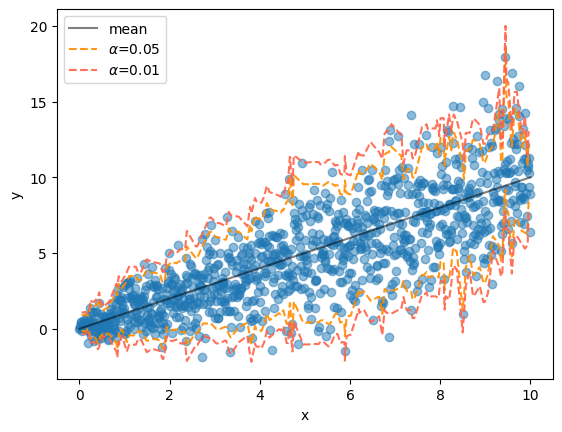

Case 1: Linear data with heteroscedastic variance

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

x = np.linspace(0, 10, 1000)

sigma = np.sqrt(x)

y = norm.rvs(loc=x, scale=sigma, random_state=0)

X = x.reshape(-1, 1)

fig, ax = plt.subplots()

ax.scatter(x, y)

ax.plot(x, x, color="black", alpha=.5, label="mean")

ax.set(xlabel="x", ylabel="y")

ax.legend()

fig.show()

from ngboost import NGBRegressor

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

ngb = NGBRegressor().fit(X_train, y_train)

y_pred = ngb.predict(X_test)

y_dist = ngb.pred_dist(X_test)

print('Test MSE', mean_squared_error(y_test, y_pred))

# test Negative Log Likelihood

test_NLL = -y_dist.logpdf(y_test).mean()

print('Test NLL', test_NLL)

[iter 0] loss=2.6949 val_loss=0.0000 scale=1.0000 norm=3.0379

[iter 100] loss=2.1762 val_loss=0.0000 scale=2.0000 norm=3.5274

[iter 200] loss=1.9886 val_loss=0.0000 scale=2.0000 norm=3.2937

[iter 300] loss=1.9128 val_loss=0.0000 scale=2.0000 norm=3.1999

[iter 400] loss=1.8704 val_loss=0.0000 scale=2.0000 norm=3.1320

Test MSE 6.060348262061894

Test NLL 2.3900057406587196
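The reported NLL is the average negative log-density of the test targets under the predicted distributions. As a minimal sketch, assuming the predictive distribution is Normal (the `NGBRegressor` default, and consistent with the `loc`/`scale` parameters used on this page), the same quantity can be written out with NumPy alone. The function name `gaussian_nll` is hypothetical and not part of NGBoost.

```python
import numpy as np


def gaussian_nll(y, loc, scale):
    """Mean negative log-likelihood of y under N(loc, scale**2)."""
    y, loc, scale = (np.asarray(a, dtype=float) for a in (y, loc, scale))
    # log N(y | loc, scale^2) = -0.5 * log(2 * pi * scale^2) - 0.5 * ((y - loc) / scale)^2
    log_pdf = -0.5 * np.log(2.0 * np.pi * scale**2) - 0.5 * ((y - loc) / scale) ** 2
    return float(-log_pdf.mean())


# Toy check: a point at the mean of a standard normal has NLL 0.5 * log(2 * pi)
print(gaussian_nll([0.0], [0.0], [1.0]))
```

With the fitted model, `gaussian_nll(y_test, y_dist.params["loc"], y_dist.params["scale"])` should agree with `-y_dist.logpdf(y_test).mean()` up to floating-point error, under the same Normal assumption.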

fig, ax = plt.subplots()

ax.scatter(x, y, alpha=.5)

ax.plot(x, x, color="black", alpha=.5, label="mean")

ax.set(xlabel="x", ylabel="y")

ax.legend()

X_test = np.sort(X_test, axis=0)

y_dist = ngb.pred_dist(X_test)

alphas = [0.05, 0.01]

colors = ["darkorange", "tomato"]

# Draw the central (1 - alpha) prediction interval for each alpha
for alpha, color in zip(alphas, colors):

    upper = norm.ppf(q=1 - (alpha/2), loc=y_dist.params["loc"], scale=y_dist.params["scale"])

    lower = norm.ppf(q=(alpha/2), loc=y_dist.params["loc"], scale=y_dist.params["scale"])

    ax.plot(X_test[:, 0], upper, alpha=.9, color=color, linestyle="--", label=rf"$\alpha$={alpha}")

    ax.plot(X_test[:, 0], lower, alpha=.9, color=color, linestyle="--")

ax.legend()

fig.show()

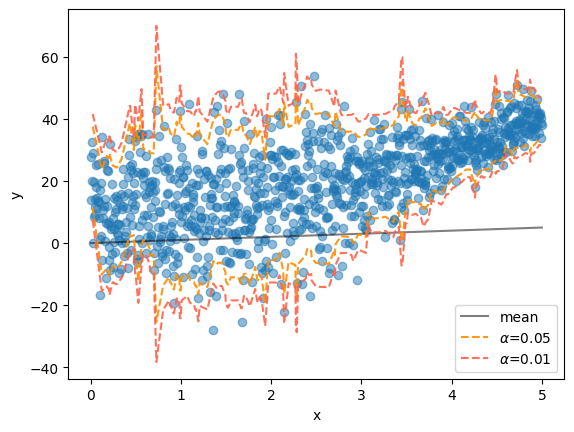
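The dashed bands can also be checked numerically: if the predicted distributions are well calibrated, roughly a fraction 1 - alpha of the observations should fall inside the band for each alpha. The sketch below is an illustration only; `interval_coverage` is a hypothetical helper, and it is run on freshly simulated data using the true parameters of the Case 1 process rather than on the fitted model.

```python
from statistics import NormalDist

import numpy as np


def interval_coverage(y, loc, scale, alpha):
    """Fraction of y inside the central (1 - alpha) Normal interval."""
    y, loc, scale = (np.asarray(a, dtype=float) for a in (y, loc, scale))
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # standard-normal quantile
    lower, upper = loc - z * scale, loc + z * scale
    return float(np.mean((y >= lower) & (y <= upper)))


# Synthetic check: mean x and standard deviation sqrt(x), as in Case 1
rng = np.random.default_rng(0)
x_demo = np.linspace(0, 10, 100_000)
y_demo = rng.normal(loc=x_demo, scale=np.sqrt(x_demo))
for alpha in (0.05, 0.01):
    print(alpha, interval_coverage(y_demo, x_demo, np.sqrt(x_demo), alpha))
```

To apply this to the fitted model, pair `y_test` with the predictions for the unsorted `X_test`. The plotting cell sorts `X_test`, so its rows no longer line up with `y_test`.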

Case 2: Nonlinear data with heteroscedastic variance

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

x = np.linspace(0, 5, 1000)

sigma = (np.sin(x / 1) + 2) * 5

z = 10 + x + x ** 2

y = norm.rvs(loc=z, scale=sigma, random_state=0)

X = x.reshape(-1, 1)

fig, ax = plt.subplots()

ax.scatter(x, y)

ax.plot(x, z, color="black", alpha=.5, label="mean")

ax.set(xlabel="x", ylabel="y")

ax.legend()

fig.show()

from ngboost import NGBRegressor

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

ngb = NGBRegressor().fit(X_train, y_train)

y_pred = ngb.predict(X_test)

y_dist = ngb.pred_dist(X_test)

print('Test MSE', mean_squared_error(y_test, y_pred))

# test Negative Log Likelihood

test_NLL = -y_dist.logpdf(y_test).mean()

print('Test NLL', test_NLL)

[iter 0] loss=4.0725 val_loss=0.0000 scale=1.0000 norm=11.6468

[iter 100] loss=3.7314 val_loss=0.0000 scale=2.0000 norm=16.6875

[iter 200] loss=3.6151 val_loss=0.0000 scale=2.0000 norm=15.9595

[iter 300] loss=3.5691 val_loss=0.0000 scale=2.0000 norm=15.5824

[iter 400] loss=3.5350 val_loss=0.0000 scale=1.0000 norm=7.6140

Test MSE 131.60217825360488

Test NLL 3.731732485918956
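A large test MSE here does not by itself indicate a poor fit, because the simulated noise is large. Since the noise model is known in this synthetic setup, one rough reference point is the average noise variance, which is the expected squared error of a predictor that returns the true mean exactly. The sketch below recomputes it from the Case 2 definition of `sigma`. The name `noise_floor` is introduced here for illustration, and a random 20% test split will fluctuate around this value.

```python
import numpy as np

# Case 2 noise model, copied from the data-generating cell
x = np.linspace(0, 5, 1000)
sigma = (np.sin(x) + 2) * 5

# Expected squared error of a predictor that returns the true mean exactly
noise_floor = float(np.mean(sigma**2))
print("Average noise variance (irreducible MSE):", noise_floor)
```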

fig, ax = plt.subplots()

ax.scatter(x, y, alpha=.5)

ax.plot(x, z, color="black", alpha=.5, label="mean")

ax.set(xlabel="x", ylabel="y")

ax.legend()

X_test = np.sort(X_test, axis=0)

y_dist = ngb.pred_dist(X_test)

alphas = [0.05, 0.01]

colors = ["darkorange", "tomato"]

# Draw the central (1 - alpha) prediction interval for each alpha
for alpha, color in zip(alphas, colors):

    upper = norm.ppf(q=1 - (alpha/2), loc=y_dist.params["loc"], scale=y_dist.params["scale"])

    lower = norm.ppf(q=(alpha/2), loc=y_dist.params["loc"], scale=y_dist.params["scale"])

    ax.plot(X_test[:, 0], upper, alpha=.9, color=color, linestyle="--", label=rf"$\alpha$={alpha}")

    ax.plot(X_test[:, 0], lower, alpha=.9, color=color, linestyle="--")

ax.legend()

fig.show()